Shortly after I finished developing the BeaST storage system concept and testing it in a virtual environment, I faced a problem: where to find a two-headed bare-metal server with a bunch of shared drives.

In fact, I did not expect to find a platform for this rather expensive testing, but I was lucky enough to get a fantastic offer from the Open Analytics guys: to take part in their project of testing the BeaST as a reliable open-source storage system for their needs.

One sunny morning Tobias Verbeke, Open Analytics Managing Director, contacted me and showed me an amazing equipment specification that fit the BeaST testing very well.

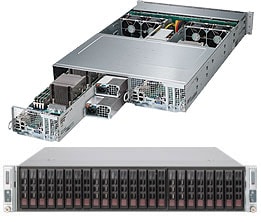

A dual-node server – Supermicro 2028TP-DC1TR 2U:

- 4 x Intel Xeon E5-2630v4

- 16 x Certified 16GB DDR4 2400MHz ECC Reg

- 4 x Intel 150GB S3520

- 2 x Intel i350-T2 Dual 1GbE (OEM)

- Onboard 3108 redundant power

- 2 x LSI 9300-8E external HBA card

- 4 x Supermicro CBL-SAST-05731M SAS cable

A drive-enclosure to which the server nodes are connected – Supermicro 847E2C-R1K28JBOD with drives:

- 3 x Seagate ST800FM0173 SAS SSD

- 3 x Supermicro 3.5 to 2.5 bracket

- 24 x HGST 8TB SAS 7200RPM (SMC)

Need I say that I agreed immediately? Together with Ronald Bister, who assisted me from the Open Analytics side, we installed FreeBSD and configured the BeaST storage system as well as the client for testing.

We started with the dual-controller BeaST Classic configured for RAID arrays and CTL HA, just to test the concept as a whole, and we found out that it worked!

As the three SSD drives were not used in that configuration, the only tricky part was to balance all 24 SAS drives across the controllers without mixing them up: I had to enable multipathing for each physical drive and then create mirrored RAID groups with striped RAID groups on top of them.

Luckily, my old GEOM configuration parsing script allowed me to find all the volumes and helped me prepare the right configuration. A bit later I updated it to show SAS addresses (sas2da.sh) and even the location of each drive in the enclosure (sas2da_mpr.sh).
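To illustrate the balancing problem described above, here is a minimal Python sketch of how such a layout could be planned before touching the real system. It is not the script I used: the drive labels (`disk00` ... `disk23`), the controller names (`ctrl-a`, `ctrl-b`) and the rule "each controller owns half of the drives, paired into mirrors and then striped" are assumptions made for the example. The `gmirror label` and `gstripe label` lines are only generated as text for review, never executed, and they assume that every drive has already been given a multipath label of the same name.

```python
from typing import Dict, List, Tuple

Pair = Tuple[str, str]


def plan_layout(drives: List[str],
                controllers: Tuple[str, str] = ("ctrl-a", "ctrl-b")) -> Dict[str, List[Pair]]:
    """Split the drives evenly between two controllers and pair them into mirrors."""
    if len(drives) % 4 != 0:
        raise ValueError("need a multiple of 4 drives: 2 controllers x mirrored pairs")
    half = len(drives) // 2
    layout: Dict[str, List[Pair]] = {}
    for idx, ctrl in enumerate(controllers):
        # Each controller owns one contiguous half of the drive list.
        owned = drives[idx * half:(idx + 1) * half]
        layout[ctrl] = [(owned[i], owned[i + 1]) for i in range(0, half, 2)]
    return layout


def render_commands(layout: Dict[str, List[Pair]]) -> List[str]:
    """Render hypothetical GEOM commands as text: mirrors first, then one stripe per controller."""
    cmds: List[str] = []
    for ctrl, pairs in layout.items():
        mirrors = []
        for n, (a, b) in enumerate(pairs):
            name = f"{ctrl}-m{n}"  # hypothetical mirror name
            cmds.append(f"gmirror label {name} multipath/{a} multipath/{b}")
            mirrors.append(f"mirror/{name}")
        # One stripe over all mirrors owned by this controller.
        cmds.append(f"gstripe label {ctrl}-s0 " + " ".join(mirrors))
    return cmds


if __name__ == "__main__":
    drives = [f"disk{i:02d}" for i in range(24)]  # hypothetical multipath labels
    for line in render_commands(plan_layout(drives)):
        print(line)
```

With 24 drives this yields six mirrored pairs and one stripe per controller. Reviewing the generated lines first makes it easier to spot a drive that ended up on the wrong controller before any GEOM metadata is written.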

As a client we used a CentOS Linux server connected to the two 10 Gigabit Ethernet interfaces of the BeaST storage system, and an even number of volumes was provided from the BeaST. On the Linux client, the drives were configured by following the guide "Configuring Linux server to work with the BeaST storage system over iSCSI protocol".

The BeaST Classic dual-controller configuration with ZFS and CTL HA was obviously more complex to set up. It took more time to implement everything properly and to enable smooth failover and failback operations. We finally achieved a stable Active/Active state on the test system (though, to prevent potential issues on a working system, the Open Analytics engineers decided to use the Active/Hot-Standby mode on the backend pools) and left the BeaST to work as the storage system for testing environments at Open Analytics.

So, what do we have at the end of the story?

We have tested the BeaST storage system on real hardware in both RAID and ZFS configurations. I'm absolutely happy that the BeaST performs well and that the failover/failback operations work normally in both the Active/Active and the Active/Hot-Standby modes. Still, I have a lot of work to do:

- improve the BeaST Quorum/arbitration subsystem to properly handle all possible hardware failures

- create installation and configuration scripts

- write a user interface
